Track 3: All Weather Semantic Segmentation


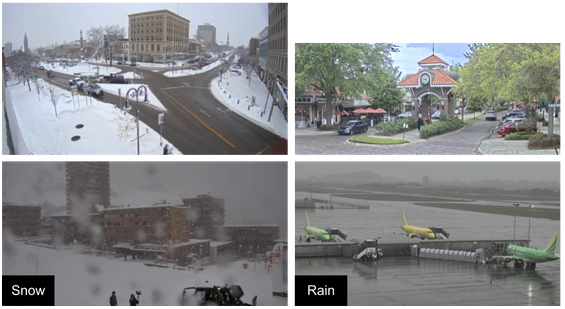

The WeatherProof Challenge invites the public to develop solutions for the challenging task of semantic segmentation on images degraded by varying degrees of weather, captured all around the world. The competition builds on our previous dataset, WeatherStream [1], which comprises weathered and deweathered images drawn from over 174k pictures. With the new dataset, WeatherProof [2], we now provide both weathered and deweathered images that have semantic segmentation labels.

Semantic segmentation has a rich history in countless applications in autonomous driving, robotics, and scene understanding. The current pace of advancement has been accelerated by the introduction of large, generally pretrained foundational models. In fact, these foundational models consistently place in the top 5 on the leaderboards of competitive semantic segmentation benchmarks such as ADE20K and Cityscapes. Yet, despite their success on these leaderboards, when presented with visually degraded images, such as those captured under adverse weather conditions, their performance degrades as well.

Existing work has provided datasets and methods with the goal of studying the effects of these natural phenomena. However, because it is difficult to capture paired datasets that allow these effects to be studied in a controlled setting, existing datasets either resort to synthetic weather effects or contain misalignments in the underlying scene between degraded and clear-weather images. To address this, we build on the WeatherStream dataset to introduce the WeatherProof Dataset, the first semantic segmentation dataset with accurately paired clear and weather-degraded images.
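Because the clear and degraded images of a pair depict the same scene, both predictions can be scored against the same label map, which isolates the effect of weather. The following is a minimal sketch of that comparison, assuming mean intersection-over-union (mIoU) as the metric; this description does not state which metric the competition uses. The function names `mean_iou` and `weather_gap` are hypothetical, and label maps are plain nested lists of class indices for illustration, with no ignore label.

```python
from itertools import chain


def mean_iou(pred, label, num_classes):
    """Mean IoU over the classes that appear in the prediction or the label.

    `pred` and `label` are 2D nested lists of integer class indices with the
    same shape (an assumption made for this illustration).
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(chain.from_iterable(pred), chain.from_iterable(label)):
        if p == t:
            # Pixel is in both the predicted and the labeled region of class p.
            inter[p] += 1
            union[p] += 1
        else:
            # Pixel is in the union of class p (predicted) and class t (labeled).
            union[p] += 1
            union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else float("nan")


def weather_gap(pred_clear, pred_degraded, label, num_classes):
    """mIoU lost when the same scene is segmented from its degraded image."""
    clear_score = mean_iou(pred_clear, label, num_classes)
    degraded_score = mean_iou(pred_degraded, label, num_classes)
    return clear_score - degraded_score


# Toy 2x2 scene with two classes, shared by both images of the pair.
label = [[0, 0], [1, 1]]
pred_clear = [[0, 0], [1, 1]]      # perfect prediction on the clear image
pred_degraded = [[0, 1], [1, 1]]   # one pixel flipped on the degraded image

gap = weather_gap(pred_clear, pred_degraded, label, num_classes=2)
print(f"mIoU drop due to weather: {gap:.3f}")
```

A gap near zero would suggest a model that is robust to the degradation in that pair, while a large positive gap points to weather-induced errors rather than differences in scene content.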

The aim of this competition is to study weather phenomena in real-world scenarios and to spark novel ideas that will further the development of semantic segmentation methods on real weathered images. As part of the competition, each team must register on the competition website and must use the WeatherProof Dataset to train their models. Teams may also use the WeatherStream Dataset, which is likewise paired and is larger but does not include semantic segmentation labels, for self-supervised and unsupervised training methods. To be considered, teams must submit an arXiv paper that includes the author name(s) and affiliation(s), the method (with a method title), the datasets used, the source of data (real, pseudo-real, synthetic), and the hardware used, and must email the link to WeatherProofChallenge@gmail.com. Teams are free to use additional datasets to improve performance, but must describe them in the arXiv paper.

The competition will be held in phases, during which the training, validation (dry-run), and testing sets will be released.

References:

[1] Howard Zhang, Yunhao Ba, Ethan Yang, Varan Mehra, Blake Gella, Akira Suzuki, Arnold Pfahnl, Chethan Chinder Chandrappa, Alex Wong, and Achuta Kadambi. WeatherStream: Light transport automation of single image deweathering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13499–13509, 2023.

[2] Blake Gella, Howard Zhang, Rishi Upadhyay, Tiffany Chang, Matthew Waliman, Yunhao Ba, Alex Wong, and Achuta Kadambi. WeatherProof: A paired-dataset approach to semantic segmentation in adverse weather. arXiv preprint arXiv:2312.09534, 2023.
