Track 4: 3D reconstruction from low-light/smoky videos

Computer vision in low-visibility conditions faces many challenges and remains a largely open problem. Low visibility can arise in various ways, for example from low-light illumination or from atmospheric scattering. In low light, Samsung, Google, and Apple compete constantly to deliver the best low-light performance in smartphone cameras. Atmospheric scattering poses a distinct challenge because the amount of scattered ambient light in the scene depends on depth. For self-driving cars and commercial UAVs, the ability of an optical system to see “through” the haze or fog present in real-world imaging is critical for making decisions that significantly affect safety. For handheld devices and small-to-moderately sized UAVs, efficiency is also a concern, both because of limited onboard computational capability and because of energy consumption.
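The depth dependence of scattered ambient light is commonly described by the standard atmospheric scattering (Koschmieder) model: an observed image I is I = J * t + A * (1 - t), where J is the clear scene radiance, A is the ambient "airlight", and the transmission t = exp(-beta * d) decays with scene depth d at a rate set by the scattering coefficient beta. The minimal sketch below applies that model to synthesize fog from a clear image and a depth map. It only illustrates why degradation grows with distance; the challenge videos contain real artificial fog, and `add_fog`, `beta`, and `airlight` are hypothetical names chosen for this example.

```python
import numpy as np


def add_fog(clear: np.ndarray, depth: np.ndarray, beta: float, airlight: float) -> np.ndarray:
    """Apply the atmospheric scattering model I = J * t + A * (1 - t).

    clear:    clear image J in [0, 1], shape (H, W) or (H, W, C)
    depth:    per-pixel scene depth d, shape (H, W)
    beta:     scattering coefficient (larger means denser fog)
    airlight: ambient light A that the fog scatters toward the camera
    """
    # Transmission: the fraction of scene radiance that survives the fog.
    t = np.exp(-beta * depth)
    # Broadcast the per-pixel transmission over colour channels if present.
    if clear.ndim == 3:
        t = t[..., np.newaxis]
    # Attenuated scene radiance plus depth-dependent scattered airlight.
    return clear * t + airlight * (1.0 - t)


if __name__ == "__main__":
    # Hypothetical example: a flat grey image whose depth increases left to right.
    image = np.full((4, 8, 3), 0.2)
    depth = np.tile(np.linspace(0.0, 10.0, 8), (4, 1))
    foggy = add_fog(image, depth, beta=0.3, airlight=0.8)
    print(foggy[0, :, 0])  # pixels drift toward the airlight value as depth grows
```

At zero depth the image is unchanged, and as depth increases every pixel converges to the airlight value, which is why distant structure is the hardest to recover.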

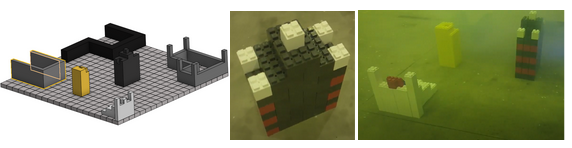

This challenge provides participants with videos captured in an environment filled with artificial fog. In particular, the videos are captured by UAVs navigating the foggy environment. The structures are purpose-built from Lego blocks based on three-dimensional CAD (computer-aided design) models. We show an example of a particular CAD model in Figure 8. These structures may be placed together in a scene or appear as standalone objects with irregular shapes. Participants will be asked to reconstruct the three-dimensional representation of the object(s) in foggy videos captured by a UAV. We additionally visualize two captures from the UAV in Figure 8. The fog conditions vary across captures, and the UAV's position changes during each capture, so the degradation caused by the fog varies over the course of a capture.

Contestants' solutions will be evaluated on two criteria: (1) accuracy and (2) execution time. The first measures whether the structures are correctly represented by the participants' methods; it can be evaluated against the ground truth provided by the CAD models. The second criterion reflects the limited computational resources and energy budget of a device deployed in the real world, for example when the computer vision program must run on a UAV’s onboard processor. To measure execution time, the contestants' programs will run on an embedded computer (Nvidia Jetson). The Jetson offers an edge-device-like system with CUDA capabilities similar to those of a standard GPU, which minimizes the overhead of moving research-level code to an edge device.
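The track description does not specify the exact accuracy metric. Purely as an illustration, one common way to compare a reconstruction with CAD ground truth is the symmetric Chamfer distance between the reconstructed point cloud and points sampled from the CAD model surface. The sketch below computes that distance and wraps a call in a wall-clock timer; `chamfer_distance` and `timed` are hypothetical helpers, not the organizers' evaluation code, and published Chamfer variants differ (squared vs. unsquared distances, sums vs. means).

```python
import time

import numpy as np


def chamfer_distance(pred: np.ndarray, gt: np.ndarray) -> float:
    """Symmetric Chamfer distance between point sets of shape (N, 3) and (M, 3).

    Uses mean nearest-neighbour squared distance in each direction.
    """
    # Pairwise squared distances, shape (N, M); O(N * M) memory, fine for a sketch.
    diff = pred[:, None, :] - gt[None, :, :]
    d2 = np.sum(diff * diff, axis=-1)
    # pred -> gt term plus gt -> pred term.
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


def timed(fn, *args):
    """Run fn(*args) once and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical data: a noisy reconstruction of points sampled from a CAD model.
    rng = np.random.default_rng(0)
    cad_points = rng.uniform(-1.0, 1.0, size=(500, 3))
    reconstruction = cad_points + rng.normal(scale=0.01, size=cad_points.shape)
    score, seconds = timed(chamfer_distance, reconstruction, cad_points)
    print(f"Chamfer distance {score:.6f} computed in {seconds:.4f} s")
```

For large point clouds, a KD-tree nearest-neighbour query (for example `scipy.spatial.cKDTree`) avoids the quadratic memory of the dense distance matrix. When timing GPU code on a Jetson, keep in mind that CUDA kernel launches are asynchronous, so the device should be synchronized before the clock is read.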

All participants must submit a fact sheet detailing their method, the datasets used, runtime, etc. The fact sheets will be compiled into a final report after the challenge to highlight trends and innovative techniques. Participants are encouraged to submit manuscripts describing their methods to the workshop.